There are some people I would like to thank personally.

I would like to summarize my internship.

Best Moment in my Internship period

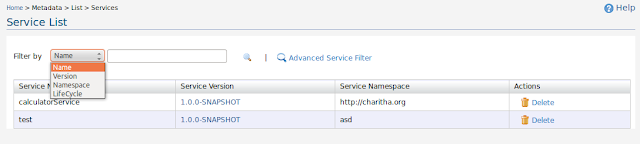

Committing my changes to the branch (in the Greg project) – I worked on several projects (around 5), but in only 2 of them did I get the chance to add my changes to the branch: test automation and the Greg basic filter improvement. It feels great to see our changes in the branch, knowing that they will be there in the future and that thousands of people are going to use them. I hope all the other futuristic projects I did will be used in the future as well. (https://svn.wso2.com/wso2/repo/intern/malinga/)

Worst moment in my Internship period

Missing the BB finals – The BB final was one of the moments I wanted to be part of from the time we started the BB tournament (I had read a blog article by a past wild boar member about how they won the final match in the last minute). But I missed it due to bad weather.

Best feeling in my Internship period

Non-tech guy knowing WSO2 – In the last few months, almost everyone I met asked about my training place. My answer was “A place called WSO2”. Most of them are non-tech people or work in different fields, and they didn't know about WSO2. But 1% of them replied “Ahh.. WSO2”. It feels great, and I was like “You know WSO2 :D”.

Worst feeling in my Internship period

Returning to the room after losing each and every TT match played – Luckily, it happens extremely rarely, because I don’t return until I win at least a single match.

Contact Me

Email – malingaonfire@gmail.com

FB - https://www.facebook.com/romesh.perera.08

LinkedIn - http://lk.linkedin.com/in/malingaperera

Twitter - https://twitter.com/malingaperera

Blog on my Internship (Internship at WSO2) -

http://iwso2.blogspot.com/

Personal blogs - http://www.executioncycle.lkblog.com/

http://www.thinklikemalinga.lkblog.com/

Or you can simply Google “Romesh malinga perera”